OPENAI // GPT-3.5 // GPT-4 // BING AI // MIDJOURNEY // ADDITIVE PROMPTING

Deconstructing Kirby: GPT-3.5 vs. GPT-4

Design schemas from classic Kirby covers with ChatGPT and Bing AI.

This post describes a chatbot sequence I ran twice, the day before and the day after GPT-4 was released (i.e., Monday and Tuesday). The “Additive Prompting” hack it’s built around is clever enough to mention on its own, but the GPT-4 before-and-afters are what really earn it this screengrab tour.

The demo I’d bookmarked (by Linus Ekenstam) is designed to generate ornate Midjourney prompts, but that wasn’t the part I was interested in. What struck me as worth testing right away was the LLM prompt concept itself: forcing elaborate text out of the model by having it populate a table from a categorical list, then convert that table back into plain text. (I’m a sucker for Markdown-table-generating prompts lately.)
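In the demo, the LLM performs both conversions itself. As a rough local illustration of the data's shape (the helper names and the sample row are mine, not Linus's), the categorical list becomes the table's columns, each example becomes a row, and "back into plain text" amounts to flattening a row into a sentence:

```python
# Illustrative sketch only: in the real prompt chain the LLM does both steps.


def to_markdown_table(columns: list[str], rows: list[dict[str, str]]) -> str:
    """Render rows (dicts keyed by column name) as a Markdown table."""
    header = "| " + " | ".join(columns) + " |"
    divider = "| " + " | ".join("---" for _ in columns) + " |"
    # Missing cells render as empty strings so every row has the same width.
    body = ["| " + " | ".join(row.get(c, "") for c in columns) + " |" for row in rows]
    return "\n".join([header, divider, *body])


def row_to_text(columns: list[str], row: dict[str, str]) -> str:
    """Flatten one table row back into a single plain-text description."""
    # Skip empty or missing cells rather than emitting "Column: ".
    parts = [f"{c}: {row[c]}" for c in columns if row.get(c)]
    return "; ".join(parts) + "."


if __name__ == "__main__":
    # Hypothetical schema and sample row, loosely based on the Kirby experiment.
    cols = ["Title", "Year", "Action"]
    rows = [{"Title": "Fantastic Four #1", "Year": "1961",
             "Action": "monster rises from the street"}]
    print(to_markdown_table(cols, rows))
    print(row_to_text(cols, rows[0]))
```

The model's version of that last step is far more elaborate, of course; presumably the useful part is that the table makes it commit to a value for every column before it starts writing prose.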

Running my madlibs through both Bing and OpenAI was illuminating enough that I’d screenshotted everything on Monday, but with GPT-4 unexpectedly deploying to Bing yesterday morning, an extended comparison was suddenly mandatory. Here’s how all three interactions transpired, in order:

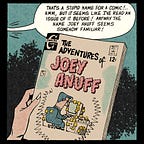

Instead of “food photography” and “hamburger,” I went with “a classic comic book cover” and “Jack Kirby.” OpenAI’s ChatGPT web interface, driven by Monday’s GPT-3.5 model, met most of my minimum standards for task completion: it described each Kirby cover in enough detail to suggest a vaguely reminiscent visualization (if one were inclined to screw around with Midjourney all day).

Monday’s Bing AI immediately outwrote Monday’s ChatGPT in descriptive output, supplying pose details for each character plus specific notes on perspective, costumes, and typography. (None of it especially accurate.)

And finally, Tuesday’s next-generation Bing AI with GPT-4: richer detail, more footnotes, and cleaner transformations, but it still hallucinates like Hunter S. Thompson. That will continue to be more than enough for some tasks and nowhere close to enough for others, I guess?

And I still wouldn’t paste this stuff into Midjourney or Stable Diffusion, not because of the nonsense descriptions but because, gimme a break. Actually, if it were articulating real design elements better, that’d be a lot more tempting to spend GPU time on, although I’m sure the result would still look like hell. Here’s the plain text if you want to try:

Draw a comic book cover for Fantastic Four #1 from 1961. The cover should show the four members of the Fantastic Four using their powers to fight a giant green monster with yellow eyes and horns that emerges from the street. Mr. Fantastic should stretch his arm to grab the monster’s horn, Invisible Girl should turn invisible and run away from the monster, Human Torch should fly in the air and shoot flames at the monster, and Thing should lift a car over his head and throw it at the monster. The title should be in large yellow letters at the top of the cover and the captions should be in red boxes at the bottom. The background should show a city street with cars and people fleeing in panic. The cover should have a vintage style and look like it was drawn by Jack Kirby.

Draw a comic book cover for The Incredible Hulk #1 from 1962. The cover should show Hulk in his original gray color lifting a jeep over his head while Rick Jones tries to calm him down. Hulk should look angry and muscular while Rick Jones should look scared and helpless. The title should be in green letters at the top of the cover and the captions should be in yellow boxes at the bottom. The background should show a desert base with tanks and helicopters. The cover should have a vintage style and look like it was drawn by Jack Kirby.

Anyway, when OpenAI and MSFT unveil their multimodal interfaces, this whole tabular approach to detail accumulation probably becomes obsolete, but until then I’m gonna keep it in mind: ask the LLM to itemize the key elements of a subject, and then have that same LLM populate examples according to its own schema.
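That recipe translates into a three-turn chat loop. Here's a minimal sketch, assuming a hypothetical `ask` callable that wraps whatever chat client you use and receives the full message history; the step wording is my paraphrase, not Linus's actual prompt text:

```python
from typing import Callable

# A chat message in the common {"role": ..., "content": ...} shape.
Message = dict[str, str]
# Hypothetical stand-in for your chat client: it receives the whole
# conversation so far and returns the assistant's reply as a string.
Ask = Callable[[list[Message]], str]


def additive_chain(ask: Ask, subject: str, examples: str, rows: int = 5) -> list[Message]:
    """Run the itemize -> tabulate -> flatten chain, letting the model pick the schema."""
    steps = [
        # 1. The model proposes its own schema (the key elements of the subject).
        f"List the key elements that define {subject}, one per line.",
        # 2. It fills a table using that schema, one row per example.
        f"Create a Markdown table with those elements as columns, "
        f"and fill it with {rows} rows describing {examples}.",
        # 3. It turns each row back into plain text.
        "Rewrite each row of the table as a detailed plain-text image prompt.",
    ]
    history: list[Message] = []
    for step in steps:
        history.append({"role": "user", "content": step})
        # Each call sees the full history, so step 2 can refer to "those elements".
        history.append({"role": "assistant", "content": ask(history)})
    return history
```

Passing the whole history on every call is what lets the model reuse its own schema; a stateless one-shot call per step would lose it.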

It should be acknowledged that before Linus tweeted this prompt chain on Monday, Nick St. Pierre had tweeted a similar approach on Sunday under the name “Additive Prompting” (I’d bookmarked that one too). The major difference between their approaches is that Nick supplies his own hand-crafted schema, while Linus lets the LLM generate it.

Please create a table that breaks down an interior architecture photograph composition into the following key elements, where each of these key elements is a column:

Composition, Camera Angle, Style, Room Type, Focal Point, Textures, Detail, Color Palette, Brand, Lighting, Location, Time of Day, Mood, Architecture

Fill the table with 10 rows of data, where:

Composition = "Editorial Style photo"

Room Type = "Living Room"

Speaking of Nick’s great work, check out this Midjourney thread of his from last week. Vivid stuff for so tiny a prompt; I was impressed! “Children are TERRIFIED of Balloons!!” should be a real poster campaign.
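Nick's version, by contrast, hard-codes the schema. A hypothetical helper (the name `build_additive_prompt` and its parameters are mine) that reassembles the interior-architecture prompt above from a column list and a few pinned values might look like this:

```python
def build_additive_prompt(subject: str, columns: list[str],
                          fixed: dict[str, str], rows: int = 10) -> str:
    """Assemble a hand-crafted-schema table prompt in the shape of Nick's example."""
    # Pinned values only make sense for columns that actually exist in the schema.
    unknown = [key for key in fixed if key not in columns]
    if unknown:
        raise ValueError(f"fixed values for unknown columns: {unknown}")
    lines = [
        f"Please create a table that breaks down {subject} into the following "
        "key elements, where each of these key elements is a column:",
        "",
        ", ".join(columns),
        "",
        f"Fill the table with {rows} rows of data, where:",
        "",
    ]
    # Each pinned column becomes a `Column = "value"` constraint line.
    lines += [f'{key} = "{value}"' for key, value in fixed.items()]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_additive_prompt(
        "an interior architecture photograph composition",
        ["Composition", "Camera Angle", "Style", "Room Type", "Focal Point",
         "Textures", "Detail", "Color Palette", "Brand", "Lighting",
         "Location", "Time of Day", "Mood", "Architecture"],
        {"Composition": "Editorial Style photo", "Room Type": "Living Room"},
    ))
```

Keeping the schema in code makes it easy to reuse across subjects; the trade-off is that you have to know the right columns up front, which is exactly the step Linus hands to the model.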

-Joey Anuff